Deepfake technology employs artificial intelligence to generate counterfeit videos, images, and audio files that appear realistic. Deepfakes in education can manipulate content and spread misinformation in learning environments. Some students may use deepfake software to alter academic records and cheat in online exams. Schools and universities struggle to detect these manipulations and prevent misuse in assessments.

According to an August 2023 survey by Statista, 62% of women and 60% of men in the U.S. were concerned about deepfakes. Only 1% of women and 3% of men reported no concern. The increasing accessibility of deepfake tools makes academic fraud easier than ever. Educational institutions must strengthen verification processes and invest in A.I. detection technology to maintain integrity.

Deepfakes also pose risks by enabling identity fraud in remote learning environments. Fake student profiles can bypass security checks and gain unauthorized access.

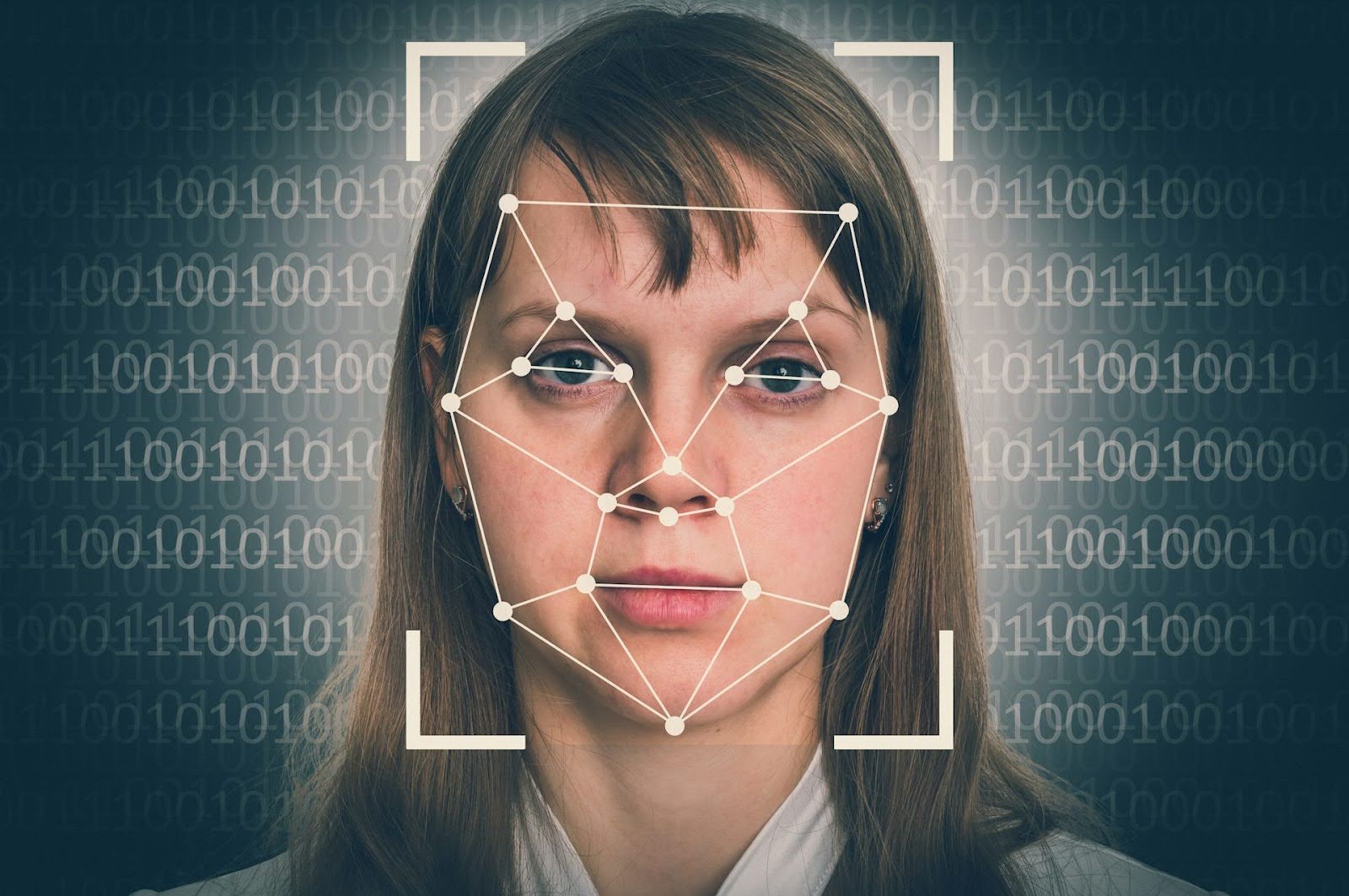

How to Spot Deepfakes: Understanding Deepfake Detection Technology

Deepfake videos often show unnatural facial expressions and irregular eye movement, which give them a noticeably artificial look. Blurred boundaries around a face and inconsistent lighting also signal manipulated content. Some deepfake images show distorted backgrounds or subtle glitches that reveal digital tampering. Educators should check for these signs when verifying academic materials or student submissions.

AI-powered detection technology analyzes facial movements and audio inconsistencies to spot deepfake content. These tools compare videos against authentic reference footage to identify signs of digital alteration. Machine learning models detect subtle pixel changes that the human eye might miss. As deepfakes advance, A.I. detection systems must keep improving to remain effective.

Educational institutions and their staff can use deepfake detection tools such as Deepware Scanner and Reality Defender. Investing in modern detection technology helps protect academic integrity.

How to Stop Deepfakes: The Role of Deepfake Images in Academic Fraud

Deepfake images can alter historical photos and academic materials to spread false information in education. Manipulated visuals may misrepresent events or fabricate data, misleading students and educators. Counterfeit images can be used to fake academic qualifications and avoid identity checks in online education. Educational institutions must stay alert to fight against misinformation and fraud.

The rise of deepfake images makes it easier for people to create fraudulent identities in the education sector. Fraudulent student profiles can access courses and exams without proper checks. Instructors might unknowingly grade assignments from fake identities, which undermines academic integrity. To prevent this from getting worse, educational institutions need stricter authentication measures.

Schools can verify images using AI-powered detection tools and reverse image searches. Regular digital literacy training helps educators and students recognize manipulated visuals effectively.
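To give a rough sense of how automated image comparison can flag an altered copy, here is a minimal Python sketch of an average hash, a simple perceptual fingerprint. It is an illustration only and not how any particular detection tool or reverse image search works. The function names and the tiny 4x4 grayscale grids are hypothetical; a real check would first load and downscale actual image files.

```python
def average_hash(pixels):
    """Return one bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Count the positions where two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Hypothetical 4x4 grayscale image: dark left half, bright right half.
original = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

# A uniformly brightened copy (for example, a re-encoded upload).
brightened = [[value + 20 for value in row] for row in original]

# A copy where two dark pixels were edited to be bright.
tampered = [row[:] for row in original]
tampered[0][0] = 250
tampered[1][1] = 250

print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(tampered)))    # 2
```

A distance of zero suggests the same picture despite a harmless brightness change, while a non-zero distance shows where the content itself differs. This kind of fingerprint only catches edits to a known original, so it complements rather than replaces dedicated deepfake detectors.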

How to Prevent Misuse: Addressing the Risks of Deepfake Software

Deepfake software lets students change videos and images to cheat on their coursework and tests. Some use it to impersonate others in online tests, making remote verification unreliable. Academic dishonesty increases when deepfake tools create fake credentials or alter research findings. Schools must take action to prevent these unethical practices from spreading further in education.

Preventing deepfake misuse requires strict verification processes and real-time monitoring of online assessments. Multi-step authentication helps confirm student identities and reduces the chances of deception. Schools can also restrict access to deepfake software on institutional networks to limit its use. Educators must stay updated on emerging threats and take proactive steps to combat manipulation.
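One common second step in multi-step authentication is a time-based one-time password (TOTP), the six-digit code produced by authenticator apps. The following is a minimal sketch of that scheme using only the Python standard library. The function names, the shared secret, and the one-step tolerance window are assumptions chosen for illustration; a real exam platform would rely on a vetted authentication service instead of hand-written code.

```python
import hashlib
import hmac
import struct
import time


def totp(secret, at_time=None, step=30, digits=6):
    """Compute a time-based one-time password (HMAC-SHA1 variant)."""
    if at_time is None:
        at_time = time.time()
    counter = int(at_time) // step            # number of elapsed time steps
    message = struct.pack(">Q", counter)      # 8-byte big-endian counter
    digest = hmac.new(secret, message, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret, submitted, at_time=None, step=30, digits=6, window=1):
    """Accept a code from the current time step or `window` steps either side."""
    if at_time is None:
        at_time = time.time()
    for drift in range(-window, window + 1):
        expected = totp(secret, at_time + drift * step, step, digits)
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False


# Hypothetical shared secret (this value is the published RFC 6238 test key).
secret = b"12345678901234567890"
print(totp(secret, at_time=59, digits=8))      # 94287082
print(verify(secret, "287082", at_time=59))    # True
print(verify(secret, "000000", at_time=59))    # False
```

Because the code changes every 30 seconds and depends on a secret held on the student's own device, a stolen password or a convincing fake video feed is not enough on its own to pass the check.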

Institutional policies should include strict consequences for deepfake misuse in academics. Digital literacy programs help students understand the ethical risks of deepfake technology.

Strengthening Education Against Deepfake Technology

Students and educators benefit from ethical A.I. education by understanding deepfake risks. Courses on identification and prevention of A.I.-altered content help learners navigate misinformation. Schools should include A.I. ethics in their curricula to promote responsible tech use. Building digital resilience equips students to face challenges from A.I. deception.

Robust detection and prevention techniques require collaboration among tech companies, educators, and policymakers. A.I. developers need to enhance deepfake detection to combat plagiarism in schools, while institutions need policies to curb A.I. misuse. Open dialogue among these groups fosters a safer digital learning environment.

Headwaters plans to introduce security features such as biometric authentication and ongoing assessments of A.I. platforms. Institutional strategies must be regularly updated to address evolving deepfake threats.

Conclusion

Deepfake technology poses three substantial threats to education: the spread of misinformation, academic misconduct, and identity fraud. Schools should implement A.I. detection systems together with strict verification protocols to fight these threats. Educators need training in deepfake identification to protect their students, while digital literacy education teaches students about the ethical risks of the technology. Multi-step authentication combined with A.I.-based detection systems can reduce these security risks. Keeping education safe depends on up-to-date institutional policies, strict security measures, and collaboration among educators, tech companies, and policymakers to preserve academic integrity.
